Why Another Headless Browser?

Open Data Is Still Out of Reach

The goal of SecretAgent is to move the world toward data openness. We believe data openness is essential for the startup ecosystem and innovation in general.

We've seen significant progress in scraping tooling over the last several years (e.g., Puppeteer, mitmproxy, Diffbot, Apify), but too much of it is closed source or not directly aimed at scrapers.

We want to make it dead simple for developers to write undetectable scraper scripts.

Existing Scrapers Are Easy to Detect

Did you know there are 76,697 checks websites can use to detect and block 99% of existing scrapers?

We created a full-spectrum bot detector that examines every layer of a web page request to work out what differentiates bots from real users on real browsers.
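To make the idea of a single "check" concrete, here is a minimal sketch of one kind of test a detector could run at the HTTP layer: comparing the order of request headers against the order a browser is expected to send. The profile below is hypothetical and hard-coded purely for illustration, and `matchesHeaderOrder` is an invented helper, not part of SecretAgent or its detector.

```javascript
// Hypothetical profile: the order in which one browser family is ASSUMED to
// send these headers. A real profile would be measured from real browsers.
const assumedBrowserHeaderOrder = [
  'host',
  'connection',
  'user-agent',
  'accept',
  'accept-encoding',
  'accept-language',
];

// Returns true when the headers shared with the profile appear in the same
// relative order as in the profile. Header names are compared case-insensitively.
function matchesHeaderOrder(requestHeaderNames, profileOrder) {
  const seen = requestHeaderNames
    .map(name => name.toLowerCase())
    .filter(name => profileOrder.includes(name));
  const expected = profileOrder.filter(name => seen.includes(name));
  return seen.length === expected.length && seen.every((name, i) => name === expected[i]);
}

// Two made-up requests: one follows the assumed profile, one does not.
const browserLike = ['Host', 'Connection', 'User-Agent', 'Accept', 'Accept-Encoding', 'Accept-Language'];
const scriptLike = ['User-Agent', 'Accept-Encoding', 'Accept', 'Connection', 'Host'];

const browserLikePasses = matchesHeaderOrder(browserLike, assumedBrowserHeaderOrder);
const scriptLikePasses = matchesHeaderOrder(scriptLike, assumedBrowserHeaderOrder);
```

A request that claims to be a given browser in its User-Agent but fails a check like this is easy to flag, which is why emulation has to cover more than the User-Agent string.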

SecretAgent can fully emulate real, human-operated browsers at every layer of the TCP/HTTP stack. Out of the box, the top 3 most popular browsers are ready to plug in.

Writing Scraper Scripts Is Too Complicated

Puppeteer was a big improvement for interacting with modern websites, but it introduced a subtle mess: the browser is a fully separate code environment from your script. You can access the power of the DOM, but you can't write reusable code to do so.

    import extractor from 'smart-link-extractor';

    // ...load page

    const extractedLinks = await page.evaluate(function() {
      const links = document.querySelectorAll('a');
      // ERROR! `extractor` is not available inside the browser context
      return extractor(links);
    });
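The failure in the snippet above can be reproduced without a browser. The sketch below is a simplified model, not Puppeteer's actual implementation: it turns a function into source text and runs it in a separate context using Node's built-in `vm` module. `evaluateInIsolatedContext`, `fakeLinks`, and the local `extractor` are hypothetical stand-ins invented for this example.

```javascript
const vm = require('node:vm');

// Hypothetical stand-in for an imported helper such as `smart-link-extractor`.
function extractor(links) {
  return links.map(link => link.href);
}

// Simplified model of an evaluate() call: the function is converted to source
// text and run in a separate context, so variables from the calling script's
// scope do not travel with it. Only `globals` are visible inside.
function evaluateInIsolatedContext(fn, globals = {}) {
  const context = vm.createContext({ ...globals });
  return vm.runInContext(`(${fn.toString()})()`, context);
}

const fakeLinks = [{ href: 'https://example.org/a' }, { href: 'https://example.org/b' }];

// Code that only touches what exists inside the context works.
const linkCount = evaluateInIsolatedContext(function () {
  return links.length;
}, { links: fakeLinks });

// Code that reaches for a helper defined in the script fails.
let errorName = null;
try {
  evaluateInIsolatedContext(function () {
    return extractor(links);
  }, { links: fakeLinks });
} catch (error) {
  // The error originates in the other context, so compare by name.
  errorName = error.name;
}
```

Here `errorName` ends up as `'ReferenceError'`: `extractor` exists in the script but not in the context where the function body actually runs.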

SecretAgent lets developers directly access the full DOM spec running in a real browser, without any context switching.

Use the DOM API you already know:

    import extractor from 'smart-link-extractor';

    // ...load document

    const links = await document.querySelectorAll('a');
    const extracted = extractor(links);
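One way an API like this could work is to record DOM property reads and calls on the script side and only run them against the browser when the chain is awaited. The sketch below illustrates that idea with a `Proxy`. It is an assumption-laden model, not SecretAgent's implementation: `remotePage`, `runRemotely`, `awaitedNode`, and the local `extractor` are all hypothetical names, and the "browser" is a plain object.

```javascript
// Hypothetical stand-in for the page living in the remote browser.
const remotePage = {
  document: {
    querySelectorAll(selector) {
      const anchors = [{ href: 'https://example.org/a' }, { href: 'https://example.org/b' }];
      return selector === 'a' ? anchors : [];
    },
  },
};

// Replays a recorded chain of property reads and calls against the remote page.
// The JSON round trip imitates results being serialized back to the script.
function runRemotely(steps) {
  let owner = null;
  let current = remotePage;
  for (const step of steps) {
    if (step.type === 'get') {
      owner = current;
      current = current[step.name];
    } else {
      current = current.apply(owner, step.args);
    }
  }
  return JSON.parse(JSON.stringify(current));
}

// Builds a proxy that records each step and only runs the chain when awaited
// (awaiting looks up `then`, which is where the recorded steps are executed).
function awaitedNode(steps) {
  return new Proxy(function () {}, {
    get(_target, name) {
      if (name === 'then') {
        return (resolve, reject) => {
          try {
            resolve(runRemotely(steps));
          } catch (error) {
            reject(error);
          }
        };
      }
      return awaitedNode([...steps, { type: 'get', name }]);
    },
    apply(_target, _thisArg, args) {
      return awaitedNode([...steps, { type: 'call', args }]);
    },
  });
}

const document = awaitedNode([{ type: 'get', name: 'document' }]);

// Hypothetical stand-in for a reusable helper such as `smart-link-extractor`.
function extractor(links) {
  return links.map(link => link.href);
}

// The helper runs in the script, on data fetched through the awaited chain.
const extractedPromise = (async () => {
  const links = await document.querySelectorAll('a');
  return extractor(links);
})();
```

Because the result comes back as ordinary data in the script's own environment, any imported helper can be applied to it directly.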

Debugging Scrapers Is Soul-Stealing

Your script stopped working. Was it because of a website change, a single network hiccup, a CAPTCHA, or a bot blocker?

If you've ever tried to debug a broken script, you've run into this wall. Once that single failure is gone, it's very hard to get back to it and figure out how to work around it the next time.

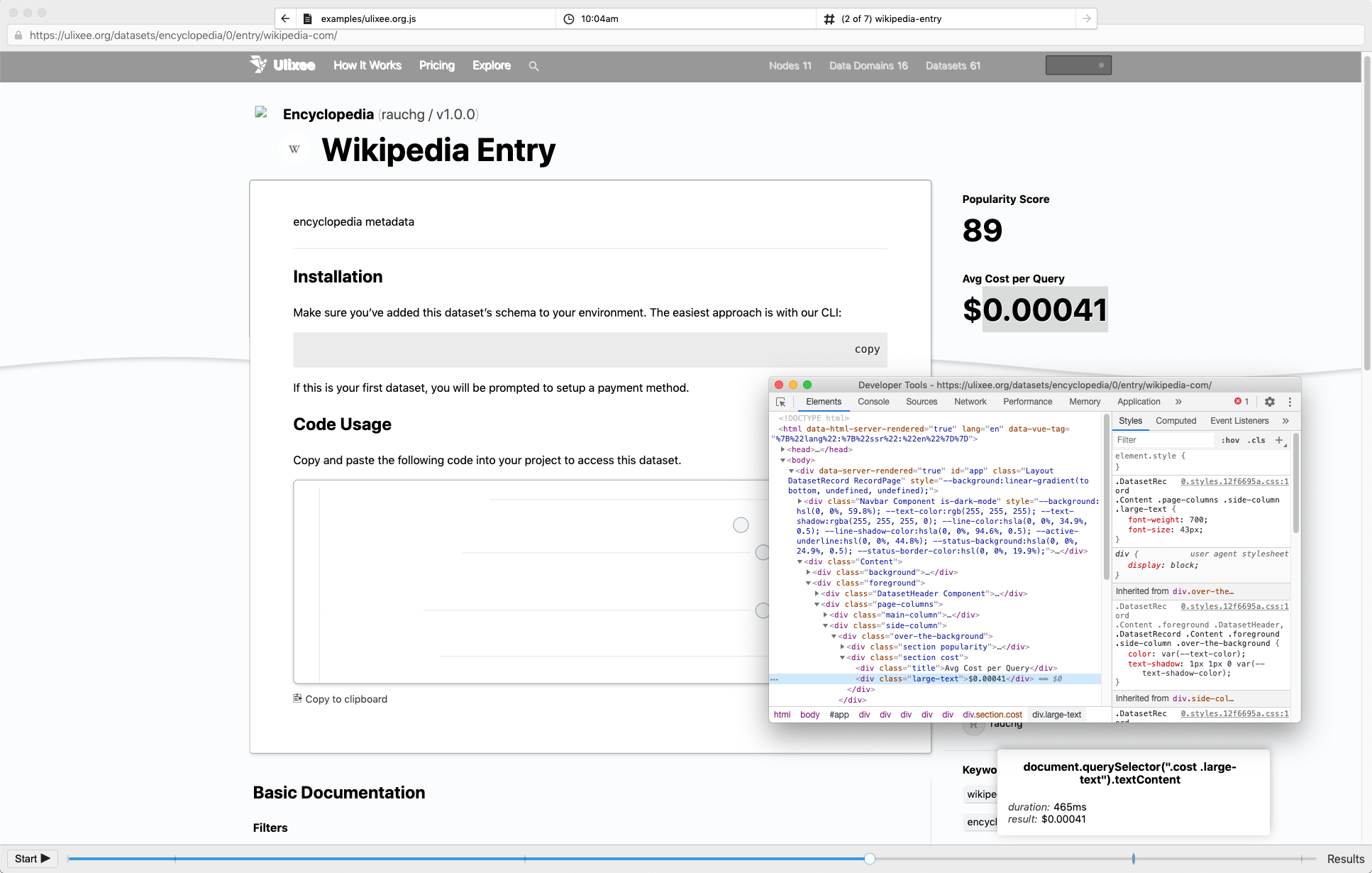

SecretAgent comes with Replay, a high-fidelity visual replay of every single scraping session. It's a full HTML-based replica of all the page assets, DOM, HTTP requests, etc. You can pull up the Replay agent and watch until the script breaks, then fix it inside Replay until you're back up and running.